Trash-talking robot proves humans can get really offended by droids

If someone on the internet was constantly calling you a noob while you were trying to storm a dungeon in World of Warcraft, do you think you’d end up more vulnerable to attack? Would it matter if that someone wasn’t even human but a robot programmed to spit out insults?

Think about it. You know you’re getting verbally pounded by a (somewhat animated) inanimate object, but it still gets to you. That recently happened to a group of test subjects at Carnegie Mellon University, who went up against a talking humanoid robot while playing a game called “Guards and Treasures.” Turned out that even people who convinced themselves this thing was programmed to screw them up still had a hard time getting over the trash talk.

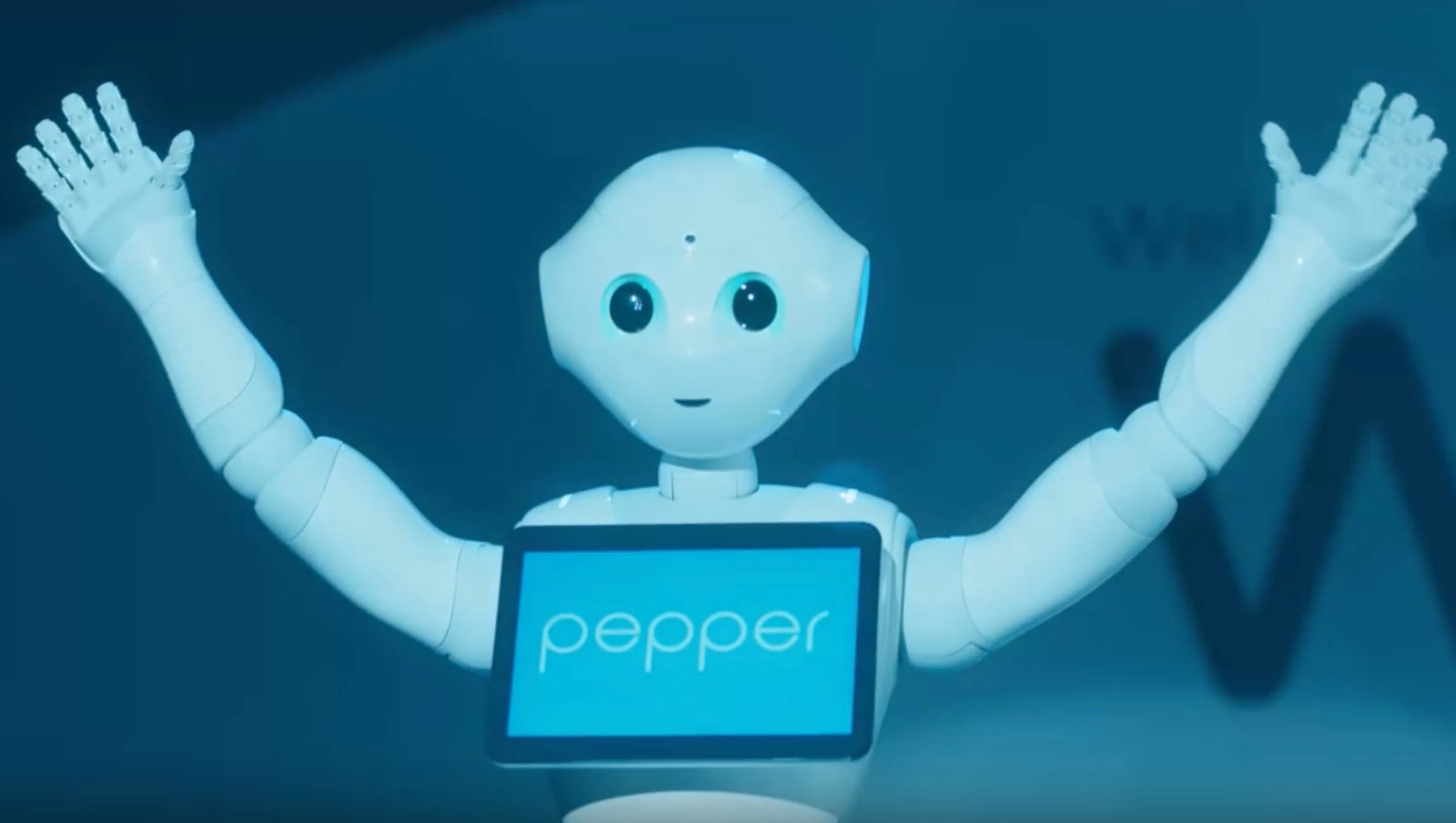

Ironically, the droid, SoftBank's Pepper robot, is kind of cute. You can even buy it. The insults were pretty lame—almost polite jabs like “I have to say you are a terrible player” and “Over the course of the game your playing has become confused”—but still managed to bring players down.

“This is one of the first studies of human-robot interaction in an environment where they are not cooperating,” said Fei Fang, one of the Carnegie Mellon professors who ran the experiment. “We can expect home assistants to be cooperative, but in [other] situations … they may not have the same goals as we do.”

Most human-robot studies focus on more positive relationships between man and machine, which is why this one is a deviation from the norm. It started as an extension of a project in an AI course Fang teaches at CMU’s Institute for Software Research. The students wanted to push the boundaries of game theory and bounded rationality (the idea that there are limits to how rational we can be) in our relationship with droids. Human players each challenged the robot 35 times, and despite knowing it was just a computerized piece of plastic and metal, still did worse when it insulted them.

Does this mean that those of us who fear AI taking over the world and squashing the dominion of Homo sapiens are actually justified in our dread? Not so fast. This study could actually have the opposite effect, teaching us how to improve AI technologies that can be used for automated learning and treating mental health issues. The study could also influence how companion robots are designed in the future.

What we still need to find out is whether the source of the nastiness could have an effect on us. Would a talking computer box be as discouraging as something that looked remotely human? Our species is going to have to face it to find out.