
USC's new script-reading AI tool can predict a film's rating in a flash

Since 1968, the Motion Picture Association of America (now the MPA) and its board of screeners have been stamping Hollywood films with official ratings that reflect the level of mature themes, sex and nudity, salty language, and depictions of violence. Its content categories exist to help parents determine which movies might not be suitable for minors and include the familiar G, PG, PG-13, R, and NC-17 ratings.

For over 50 years, the MPAA has been judging Tinseltown releases on a sliding scale of societal acceptance and cultural norms, a process that is often time-consuming and can seriously affect a film's box office potential if an undesired rating limits who can view it.

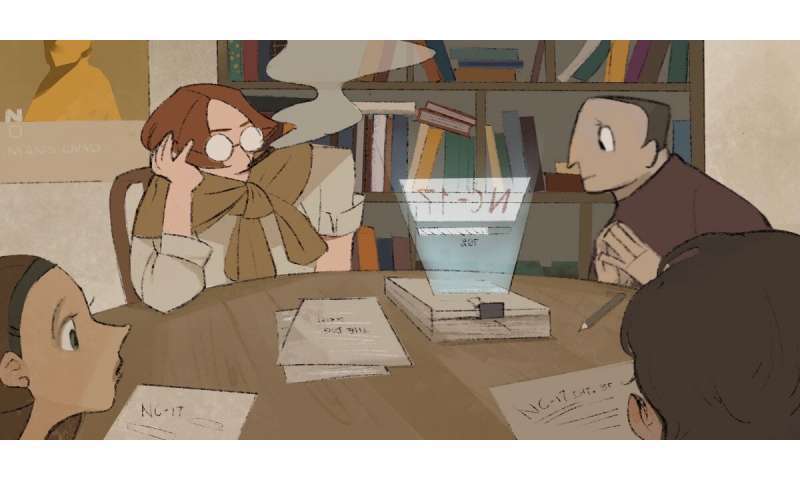

Now an artificial intelligence program designed by researchers from the USC Viterbi School of Engineering can speed that process up by rating a movie's content in seconds, all based on the final draft of the screenplay. By doing so, the AI might be able to save studios money by allowing them to alter scripts early and not require reshoots or editing room stress in post-production.

USC Professor Shrikanth Narayanan and a crew of researchers from USC Viterbi's Signal Analysis and Interpretation Lab (SAIL) have presented a system in which linguistic cues can directly signal violent behavior, drug abuse, or sexual content on the part of a movie's characters.

"Our model looks at the movie script, rather than the actual scenes, including e.g. sounds like a gunshot or explosion that occur later in the production pipeline," explains lead study author Victor Martinez, a doctoral candidate in computer science at USC Viterbi. "This has the benefit of providing a rating long before production to help filmmakers decide e.g. the degree of violence and whether it needs to be toned down.

"There seems to be a correlation in the amount of content in a typical film focused on substance abuse and the amount of sexual content. Whether intentionally or not, filmmakers seem to match the level of substance abuse-related content with sexually explicit content. We found that filmmakers compensate for low levels of violence with joint portrayals of substance abuse and sexual content."

Their experiments gathered 992 movie scripts that included violent, substance-abuse and sexual content as judged by Common Sense Media, a nonprofit group focused on entertainment recommendations for parents and schools. Using these pre-labeled screenplays, the SAIL team was able to train artificial intelligence to quickly detect and recognize parallel risk factors, patterns, and language.

The AI's neural network then scans pages for semantics and expressions of emotion, classifying sentences and phrases as positive, negative, aggressive, or a related label, and placing them into categories for violence, drug abuse, and sexual content.
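For readers curious about what sorting sentences into those three categories could look like in practice, here is a deliberately simple Python sketch. It is not the SAIL team's model: it swaps the neural network for a hand-written keyword lookup, and the cue words, the `CUES` table, and the `tag_sentence` and `score_script` functions are all hypothetical names invented for illustration.

```python
import re
from collections import Counter

# Hypothetical cue words per category. These are illustrative only and are
# NOT taken from the USC study, which uses a trained neural network instead.
CUES = {
    "violence": {"gun", "shoots", "stabs", "punches", "explosion", "blood"},
    "substance_abuse": {"whiskey", "cocaine", "drunk", "snorts"},
    "sexual_content": {"naked", "kisses", "undresses"},
}


def tag_sentence(sentence):
    """Return the sorted list of categories whose cue words appear in the sentence."""
    words = set(re.findall(r"[a-z']+", sentence.lower()))
    return sorted(cat for cat, cues in CUES.items() if words & cues)


def score_script(text):
    """Return, per category, the fraction of sentences flagged for that category."""
    # Naive sentence split on whitespace that follows ., ! or ?
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    counts = Counter()
    for sentence in sentences:
        counts.update(tag_sentence(sentence))
    total = len(sentences)
    return {cat: (counts[cat] / total if total else 0.0) for cat in CUES}


if __name__ == "__main__":
    sample = (
        "Jack pulls a gun and shoots the lock. "
        "Mara pours a whiskey. "
        "They walk to the car in silence."
    )
    print(score_script(sample))
```

A keyword list like this misses context that a trained model can pick up, which is why the researchers relied on hundreds of pre-labeled screenplays rather than a fixed vocabulary.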

Narayanan's SAIL lab has been highly influential in this fascinating field of media informatics and applied natural language processing, delivering valuable storytelling awareness to the Hollywood community.

"At SAIL, we are designing technologies and tools, based on AI, for all stakeholders in this creative business—the writers, film-makers and producers—to raise awareness about the varied important details associated in telling their story on film," Narayanan said.

"Not only are we interested in the perspective of the storytellers of the narratives they weave, but also in understanding the impact on the audience and the 'take-away' from the whole experience. Tools like these will help raise societally-meaningful awareness, for example, through identifying negative stereotypes."