This VR system turns you into an NPC by moving your muscles for you

If you stop playing the game, the game plays you.

David Cronenberg’s Videodrome imagines a torturous future where technology and the body meld into a single entity. Viewers of Videodrome — the in-universe program — begin to endure intense hallucinations and become increasingly incapable of separating what’s real from what’s being fed to them by the machine.

In the real world, we’ve historically had a clear separation between our physical lives and our digital entertainment. Even when things feel emotionally real, they don’t engage with us physically. The rising popularity of virtual and augmented reality is starting to blur the lines between IRL and digital experiences, and new research is making the overlap even more concrete.

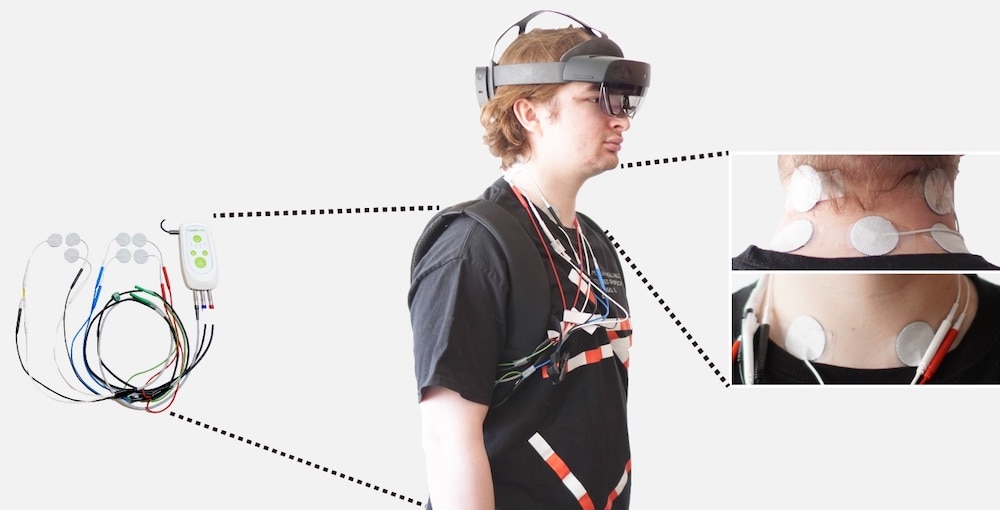

Yudai Tanaka, Pedro Lopes, and Jun Nishida from the Computer Science Department at the University of Chicago (the same lab that brought us chemical haptics) aren’t evil masterminds on a mission to control humanity, but they are creating ways for virtual reality experiences to become more tactile. In a recent paper titled “Electrical Head Actuation: Enabling Interactive Systems to Directly Manipulate Head Orientation,” they describe a system that uses electrical stimulation to control the head movements of players in a VR environment.

The system takes well-established electrical muscle stimulation (EMS) technology, typically used as a tool for medical rehabilitation, and applies it to head orientation by delivering low-level shocks to the neck muscles.

“The system sends electrical pulses to the neurons that are connected to muscle fibers in the neck. When they receive the pulses, the neurons misunderstand them as motor signals and the muscle contracts. We used this principle to move the neck muscles and control orientation,” Tanaka told SYFY WIRE.

A similar technology was used by Pedro Lopes a few years back to simulate the sensation of weight when carrying virtual objects. The visual component of virtual reality is constantly improving, but exploring a virtual environment lacks verisimilitude when everything you interact with is a phantom without any tactile presence. By stimulating the muscles in the arms, Lopes was able to mimic the effects of gravity and give players the sensation that they were actually holding an object. The same basic premise is at play here, only now your actual body movements are being controlled, at least some of the time, by the game.

In demonstrations, the team used the technology to direct users toward an object, in this case a fire extinguisher during a fire safety training program. In essence, the program can sense when you are having trouble knowing where to look or what to do, and it sends an electrical signal that physically turns your head in the right direction. Importantly, it also decides when it is safe to send a signal. That means even if your head jerks in response to a punch from a virtual boxing opponent, you’re unlikely to be hurt.

“The system has a function that knows if the user is moving, especially against the direction of the stimulation and will immediately shut off to ensure operational safety,” Tanaka said.
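For the technically curious, the shut-off Tanaka describes can be pictured as a simple check that runs before every pulse. The Python sketch below is only an illustration of that idea, not the team’s code: the `HeadState` type, the `stimulation_command` function, and the tolerance and threshold values are all hypothetical, and it assumes the headset reports head yaw and turning speed.

```python
from dataclasses import dataclass


@dataclass
class HeadState:
    """Hypothetical snapshot of head tracking data from a VR headset."""
    yaw_deg: float           # current head yaw, in degrees
    yaw_velocity_dps: float  # turning speed in degrees/second (positive = turning right)


def stimulation_command(
    state: HeadState,
    target_yaw_deg: float,
    tolerance_deg: float = 5.0,          # assumed: close enough, stop nudging
    resist_threshold_dps: float = 10.0,  # assumed: speed that counts as resisting
) -> int:
    """Return +1 (nudge right), -1 (nudge left), or 0 (stimulation off).

    Illustrative only: ignores yaw wrap-around at +/-180 degrees.
    """
    error = target_yaw_deg - state.yaw_deg

    # Already looking roughly at the target: no stimulation needed.
    if abs(error) <= tolerance_deg:
        return 0

    direction = 1 if error > 0 else -1

    # Safety cutoff: if the user is turning against the stimulation
    # direction faster than the threshold, switch the stimulation off.
    if state.yaw_velocity_dps * direction < -resist_threshold_dps:
        return 0

    return direction


if __name__ == "__main__":
    # Head is still, target is to the right: nudge right.
    print(stimulation_command(HeadState(0.0, 0.0), 30.0))
    # User is actively turning left, away from the target: shut off.
    print(stimulation_command(HeadState(0.0, -20.0), 30.0))
```

In this toy version, resisting the nudge always wins: the moment the player turns the other way with any real speed, the command drops to zero.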

Technologies like these could open up new possibilities for VR designers and content creators, allowing them to give players clear direction while maintaining the immersion of their games and environments. Traditionally, a game might put an arrow up on the screen, light up a path, or use auditory cues to point you in the right direction. In all of these cases, the content of the experience is modified in order to give you information. That has the potential to break the immersion.

“We were excited to see if there was a way to do that without changing the content. The idea is to keep it immersive and help the user find out where to go or what to look at next,” Lopes said.

Outside of gaming, a digital-muscle interface might help people learn to do physical tasks, like performing an exercise with correct form or learning to play an instrument, more easily by guiding the body through the required muscle movements. Instead of giving you visual or auditory directions, the system speaks directly to your muscles, cutting out the middle step.

“Pointing an arrow doesn’t teach your body what to do. Yudai’s system is talking directly to your body. There’s one less translational step. We don’t yet know what it means to have one less translational step, but it might be that people learn better from this physical mode of training,” Lopes said.

The possibilities of this system might even extend off Earth. While it wasn’t designed with microgravity environments in mind, EMS is commonly used in medical rehabilitation to prevent or help reverse loss of muscle mass, which is a considerable problem for astronauts living in microgravity. Spending time playing space-based VR games with direct muscle stimulation might help astronauts maintain muscle mass on their way to Mars and beyond, not to mention giving them an opportunity to get out of a cramped ship, even if only virtually.

As we move toward an increasingly virtual future, the intersection between the digital and the physical is likely to continue to grow. Let’s just hope it’s more holodeck than Videodrome.