The Universe is acting funny. Or we’re looking at it wrong.

There’s a problem with the Universe.

Or, possibly, there’s a problem with how we’re observing it. Either way, something’s fishy.

In a nutshell, the Universe is expanding. There’s a whole bunch of different ways to measure that expansion. The good news is these methods all get roughly the same number for it. The bad news is they don’t get exactly the same number. One group of methods gets one number, and another group gets another number.

This discrepancy has been around awhile, and it’s not getting better. In fact, it’s getting worse (as astronomers like to say, there’s a growing tension between the methods). The big difference between the two groups is that one set of methods looks at relatively nearby things in the Universe, and the other looks at very distant ones. Either we’re doing something wrong, or the Universe is doing something different far away than it is near here.

A new paper just published uses a clever method to measure the expansion looking at nearby galaxies, and what it finds falls right in line with the other “nearby objects” methods. Which may or may not help.

OK, backing up a wee bit… we’ve known for a century or so that the Universe is expanding. We see galaxies all moving away from us, and the farther away a galaxy is the faster it appears to be moving. As far as we can tell, there’s a tight relationship between a galaxy’s distance and how rapidly it appears to be moving away. So, say, a galaxy 1 megaparsec* (abbreviated Mpc) away may be moving away from us at 70 kilometers per second, and one twice as far (2 Mpc) is moving twice as fast (140 km/sec).

That ratio seems to hold for great distances, so we call it the Hubble Constant, or H0 (pronounced “H naught”), after Edwin Hubble, who was one of the first to propose this idea. It’s measured in the weird units of kilometers per second per megaparsec (or velocity per distance: something’s moving faster if it’s farther away).
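If it helps to see that relationship as arithmetic, here is a minimal sketch in Python. The function names are mine, and the default of 70 km/sec/Mpc is just the round illustrative value from the example above, not a measurement.

```python
H0_EXAMPLE = 70.0  # km/sec/Mpc; round illustrative value, not a measured result


def recession_velocity(distance_mpc, h0=H0_EXAMPLE):
    """Apparent recession speed (km/sec) of a galaxy distance_mpc megaparsecs away."""
    return h0 * distance_mpc


def distance_from_velocity(velocity_km_s, h0=H0_EXAMPLE):
    """Distance (Mpc) implied by a recession speed, for a given value of H0."""
    return velocity_km_s / h0


# Twice the distance, twice the speed.
for d in (1, 2):
    print(f"{d} Mpc -> {recession_velocity(d):.0f} km/sec")

# The same observed speed implies a different distance for a different H0.
for h0 in (73.0, 68.0):
    print(f"H0 = {h0}: 7300 km/sec -> {distance_from_velocity(7300, h0):.1f} Mpc")
```

The second loop is the reason the exact value matters: feed in the same measured speed and a smaller H0 puts the galaxy farther away.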

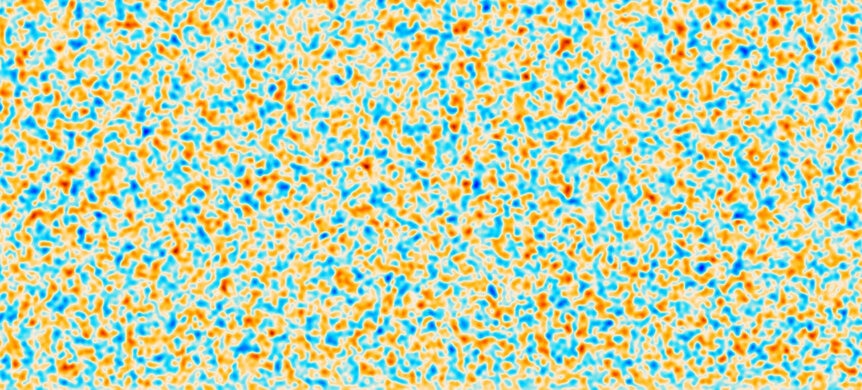

Methods that look at closer objects like stars in nearby galaxies, exploding stars, and the like get H0 to be about 73 km/sec/Mpc. But methods using more distant things like the cosmic microwave background and baryon acoustic oscillations get a smaller number, more like 68 km/sec/Mpc.

They’re close, but they’re not the same. And given that both groups of methods seem internally consistent, that’s an issue. What’s going on?

The new paper uses a cool method called surface brightness fluctuations. It’s a fancy name but it involves an idea that’s actually intuitive.

Imagine you’re standing at the edge of a forest, right in front of a tree. Because you’re so close, you see only one tree in your field of vision. Back up a bit and you can see more trees. Back up farther and you can see even more.

Same with galaxies. Observe a close-by one with a telescope. In a given pixel of your camera, you might see ten stars, all blurred together into that single pixel. Just due to statistics, another pixel might see 15 (it’s 50% brighter than the first pixel), another 5 (half as bright as the first one).

Now look at a galaxy that is the same in every way but twice as distant. In one pixel you might see 20 stars, and in others you see 27 and 13 (a difference of ~35%). At 10 times the distance you see 120, 105, and 90 (a difference of roughly 10%). Note I am way simplifying this here and just making up numbers as an example. The point is, the farther away a galaxy is, the smoother the distribution of brightness is (the difference between pixels gets smaller compared to the total in each pixel). Not only that, it’s smoother in a way you can measure and assign a number to.
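For the curious, here is a rough sketch of why the smoothing is something you can put a number on. It rests on two assumptions that are mine, not the paper's: the star count in a pixel scatters like simple counting (Poisson) statistics, so the typical scatter is the square root of the mean, and a pixel covers a fixed angle on the sky, so the patch of galaxy it sees grows with the square of the distance. The function names and the starting count of 10 stars are made up for illustration, and the output will not match the made-up numbers above.

```python
import math


def stars_per_pixel(count_at_reference, distance_ratio):
    """Mean stars in one pixel for a galaxy distance_ratio times farther away.

    Assumes a pixel sees a fixed angle, so the area it covers on the galaxy
    (and the number of stars in it) scales as distance squared.
    """
    return count_at_reference * distance_ratio ** 2


def fractional_fluctuation(mean_count):
    """Typical pixel-to-pixel scatter divided by the mean, assuming Poisson counts."""
    return 1.0 / math.sqrt(mean_count)


for ratio in (1, 2, 10):
    n = stars_per_pixel(10, ratio)  # 10 stars per pixel nearby is an arbitrary start
    print(f"{ratio:>2}x distance: ~{n:.0f} stars/pixel, "
          f"scatter ~{100 * fractional_fluctuation(n):.1f}% of the mean")
```

Under those assumptions the relative lumpiness drops in proportion to the distance: double the distance and the pixel-to-pixel scatter is cut in half. That is what lets you turn a measured smoothness into a distance.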

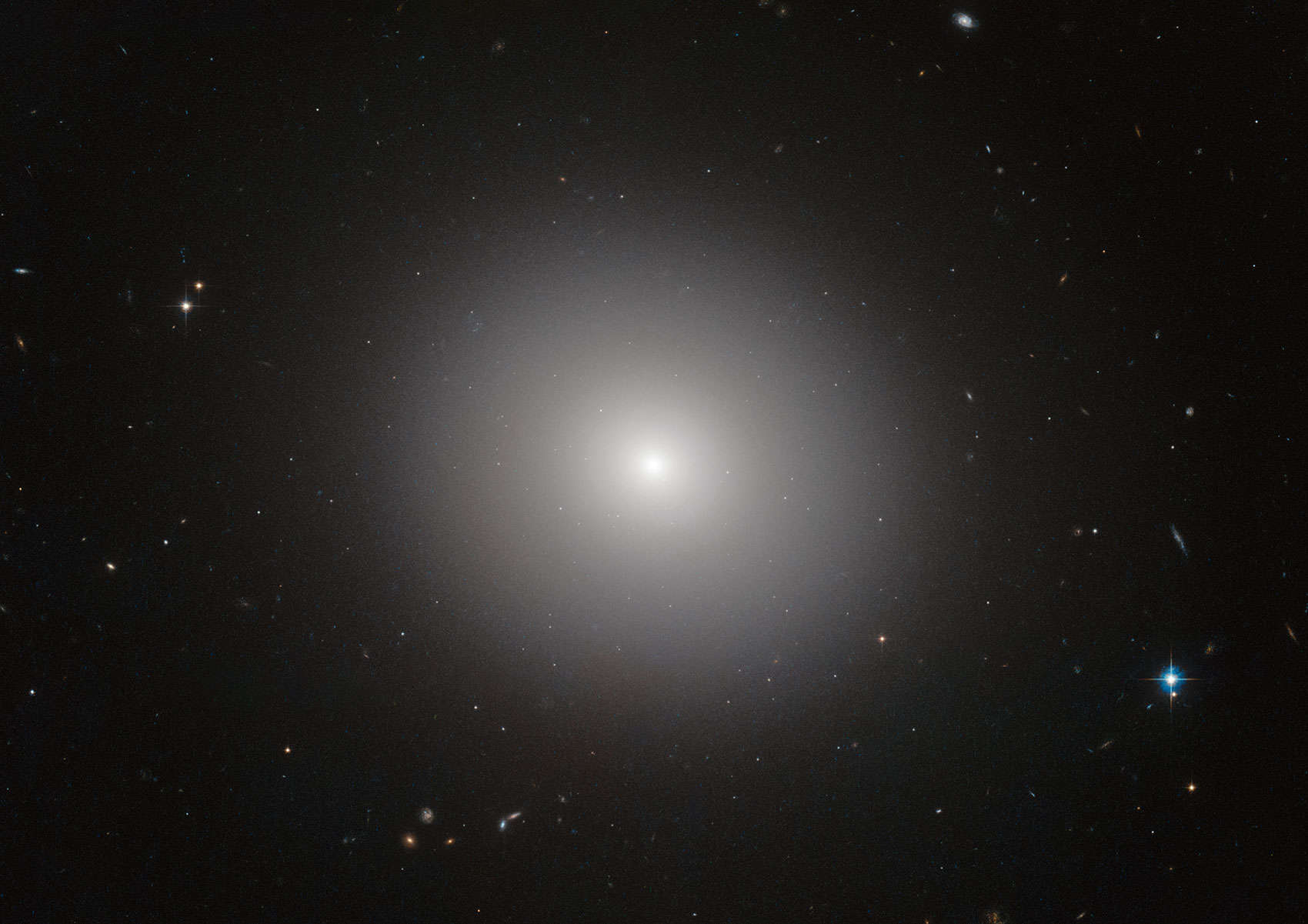

In reality it’s more complicated than that. If a galaxy is busily making stars in one section it throws off the numbers, so it’s best to look at elliptical galaxies, which haven’t made new stars in billions of years. The galaxy needs to be close enough that you can get good statistics, which limits this to ones that are maybe 300 million light years away and closer. You also have to account for dust, and background galaxies in your images, and star clusters, and how galaxies have more stars toward their centers, and... and... and...

But all these are known and fairly straightforward to correct for.

When they did all this, the number they got for H0 was (drum roll…) 73.3 km/sec/Mpc (with an uncertainty of roughly ±2 km/sec/Mpc), right in line with the other nearby methods and well above the value from the group using distant methods.
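To get a feel for "right in line" versus "very different," here is a back-of-the-envelope comparison. It uses only the numbers quoted in this article and counts how many of the new measurement's uncertainties separate it from each group's value. The function name is mine. It also ignores the uncertainties on the 73 and 68 figures, which aren't given here, so it overstates the tension somewhat.

```python
def offset_in_sigma(measured, uncertainty, reference):
    """Gap between a measurement and a reference value, in units of the
    measurement's own uncertainty (the reference's uncertainty is ignored)."""
    return (measured - reference) / uncertainty


new_h0, new_err = 73.3, 2.0   # km/sec/Mpc, from the new paper as quoted above
nearby_group = 73.0           # approximate value from nearby-object methods
distant_group = 68.0          # approximate value from distant-object methods

print(f"vs nearby group:  {offset_in_sigma(new_h0, new_err, nearby_group):+.2f} sigma")
print(f"vs distant group: {offset_in_sigma(new_h0, new_err, distant_group):+.2f} sigma")
```

A fraction of one uncertainty away from the nearby group, and between two and three away from the distant group. On its own that isn't a smoking gun, but it stacks up with the other nearby methods.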

In a way that’s expected, but again this lends credence to the idea that we’re missing something important here.

All the methods have their issues, but their uncertainties are fairly small. Either we are really underestimating these uncertainties (always possible but a little unlikely at this point) or the Universe is behaving in a way we didn’t expect.

If I had to bet, I’d go with the latter.

Why? Because it’s done this before. The Universe is tricky. Ever since the 1990s we’ve known the expansion has deviated from being a constant. Astronomers saw that very distant exploding stars were always farther away than a simple measurement indicated, which led them to think that the Universe is expanding faster now than it used to, which in turn led to the discovery of dark energy — the mysterious entity accelerating the Universal expansion.

When we look at very distant objects we see them as they were in the past, when the Universe was younger. If the expansion rate of the Universe was different then (say 12 – 13.8 billion years ago) than it is now (less than a billion years ago) we can get two different values for H0. Or maybe different parts of the Universe are expanding at different rates.

If the expansion rate has changed that has profound implications. It means the Universe isn’t the age we think it is (we use the expansion rate to backtrack the age), which means it’s a different size, which means the time it takes for things to happen is different. It means the physical processes that happened in the early Universe happened at different times, and perhaps other processes are involved that affect the expansion rate.
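Here is a crude sketch of that backtracking. It computes the "Hubble time," which is just 1 divided by H0 with the units untangled, and it assumes the expansion rate never changed. We know that assumption is wrong, and real age estimates use a full cosmological model, so treat this as an illustration of how sensitive the answer is to H0 rather than an actual age calculation. The constant and function names are mine.

```python
KM_PER_MPC = 3.0857e19        # kilometers in one megaparsec
SECONDS_PER_GYR = 3.15576e16  # seconds in a billion years (365.25-day years)


def hubble_time_gyr(h0_km_s_mpc):
    """Hubble time 1/H0 in billions of years, assuming constant expansion."""
    seconds = KM_PER_MPC / h0_km_s_mpc
    return seconds / SECONDS_PER_GYR


for h0 in (73.0, 68.0):
    print(f"H0 = {h0} km/sec/Mpc -> about {hubble_time_gyr(h0):.1f} billion years")
```

Going from 73 to 68 shifts this rough timescale by about a billion years, which is why a "small" disagreement in H0 is such a big deal.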

So yeah, it’s a mess. Either we don’t understand how the Universe behaves well enough, or we’re not measuring it properly. Either way it’s a huge pain. And we just don’t know which it is.

This new paper makes it look even more like the discrepancy is real, and the Universe itself is to blame. But it’s not conclusive. We need to keep at this, keep hammering down the uncertainties, keep trying new methods, and hopefully at some point we’ll have enough data to point at something and say, “AHA!”

That will be an interesting day. Our understanding of the cosmos will take a big leap when that happens, and then cosmologists will have to find something else to argue about. Which they will. It’s a big place, this Universe, and there’s plenty about it to fuss over.

* A parsec is a unit of length equal to 3.26 light years (or 1/12th of a Kessel). It’s a weird unit, I know, but it has a lot of historical significance and is tied to a lot of ways we measure distance. Astronomers who look at galaxies like to use the distance unit of megaparsecs, where 1 Mpc is 3.26 million light years. That’s a little bit longer than the distance between us and the Andromeda galaxy.