
Could we build a Matrix-like simulation if we wanted to?

You might be living in a false reality and not even know it.

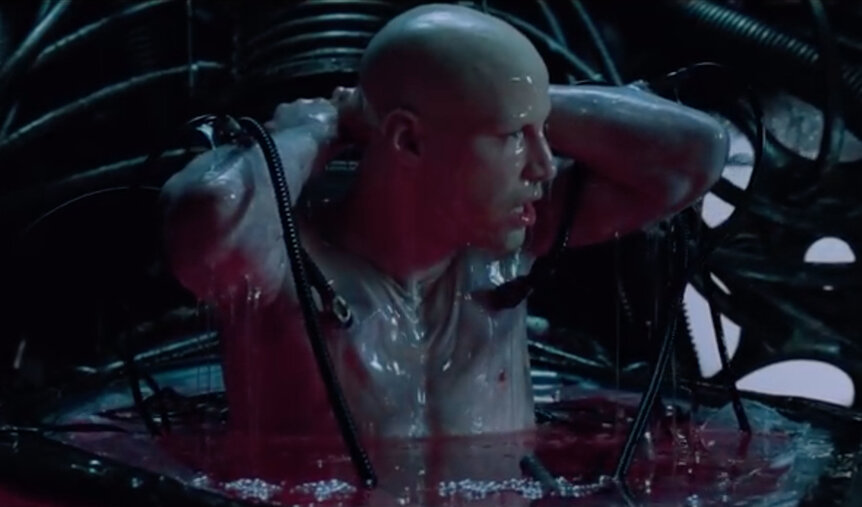

It's been more than 20 years since The Matrix first took us on a cyber-philosophical trip through truth and reality, and 20 years since bullet time first blew our collective pre-millennium minds. Now, with the release of the franchise's fourth installment, The Matrix Resurrections, many of us are revisiting that virtual world and reconsidering the questions it raised.

Whether or not we're currently living in a simulation, waiting for a trench coat-clad savior to release us from our mental prison, is a question of some debate within futurism circles. That debate has been beaten to death, and it's likely you already have an opinion one way or another. The question on our minds, however, is whether or not we could build a Matrix-like simulation if we wanted to, now or in the near future.

The graphics and computing technology for crafting immersive open worlds is improving all the time, and the worlds it produces are becoming increasingly photorealistic. Supposing we can crest the uncanny valley and have the gumption to trap a few billion souls inside of a lie, what might it take to make it work? To our minds (caged in a dystopian pod of pink goo as they may be), there are two key components necessary for crafting a convincing virtual facsimile of reality.

READING YOUR PLAYERS' THOUGHTS

The Matrix only works because the machines are able to take in the thoughts and experiences of the embedded humans and feed them into the simulation. The world the machines present is merely a framework which must be inhabited by acting players.

In the films, the machines take in that information through an array of ports implanted at various spots along the body, from the base of the skull down through the body and limbs. In the real world, we have something similar, albeit more primitive.

A team from the University of Oregon trained an artificial intelligence to reconstruct faces using only the brain activity of observers. Participants looked at images of faces while an fMRI machine recorded their brain activity. Importantly, fMRI machines don't record the actual synaptic activity of the brain. Instead, they look at changes in blood flow related to stimuli.

In the first round of testing, the artificial intelligence took in the activity recorded by the brain scans and compared it to the associated faces, considering 300 mathematical points associated with physical features. This allowed it to create a sort of map connecting particular features to related blood flow in the brain.

Next, participants were shown a second set of pictures and the AI was asked to reconstruct the faces they viewed, using only the brain scans and the learned feature map. The results weren't perfect. In fact, they were pretty bizarre. But if you look at the reconstructed photos long enough, you start to see glimmers of the actual faces. The reconstructed images look like deepfakes processed on a Nintendo 64, but there's something there, the beginnings of recognition. The software is able to read the thoughts, in a manner of speaking, and reconstruct what a person saw from their brain activity. It's just that the fidelity is lower than we'd like.
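For the curious, the two-round idea described above (learn a map from scans to facial features, then run new scans through it) can be sketched in a few lines of Python. This is a toy illustration only, not the Oregon team's method: the data is simulated, the "scan" is assumed to be a noisy linear mix of the face features, the decoder is a simple linear map fit by gradient descent, and every name and number here (`N_VOXELS`, `simulate_scan`, `fit_decoder`, three features instead of 300) is a hypothetical stand-in.

```python
import random

N_FEATURES = 3   # toy stand-in for the study's 300 facial feature points
N_VOXELS = 8     # hypothetical number of scan measurements per image

rng = random.Random(0)

# Assumption: each scan measurement is a noisy linear mix of the face features.
mixing = [[rng.uniform(-1, 1) for _ in range(N_FEATURES)] for _ in range(N_VOXELS)]

def random_face():
    """A made-up face, described only by its feature values."""
    return [rng.uniform(-1, 1) for _ in range(N_FEATURES)]

def simulate_scan(face, noise=0.1):
    """Simulated brain response to viewing a face (not real fMRI data)."""
    return [sum(w * f for w, f in zip(row, face)) + rng.gauss(0, noise)
            for row in mixing]

def decode(weights, scan):
    """Reconstruct face features from a scan using the learned map."""
    return [sum(w * s for w, s in zip(row, scan)) for row in weights]

def fit_decoder(scans, faces, lr=0.01, epochs=100):
    """Round one: learn the scan-to-feature map by plain gradient descent."""
    weights = [[0.0] * N_VOXELS for _ in range(N_FEATURES)]
    for _ in range(epochs):
        for scan, face in zip(scans, faces):
            guess = decode(weights, scan)
            for i in range(N_FEATURES):
                error = guess[i] - face[i]
                for j in range(N_VOXELS):
                    weights[i][j] -= lr * error * scan[j]
    return weights

def mean_squared_error(guesses, truths):
    total = sum((g - t) ** 2
                for guess, truth in zip(guesses, truths)
                for g, t in zip(guess, truth))
    return total / (len(truths) * N_FEATURES)

# Round one: scans paired with the faces that produced them.
train_faces = [random_face() for _ in range(200)]
train_scans = [simulate_scan(face) for face in train_faces]
weights = fit_decoder(train_scans, train_faces)

# Round two: new faces, reconstructed from the scans alone.
test_faces = [random_face() for _ in range(50)]
test_scans = [simulate_scan(face) for face in test_faces]
reconstructions = [decode(weights, scan) for scan in test_scans]

decoder_error = mean_squared_error(reconstructions, test_faces)
baseline_error = mean_squared_error([[0.0] * N_FEATURES] * len(test_faces), test_faces)
print(f"decoder error: {decoder_error:.3f}, guess-the-average error: {baseline_error:.3f}")
```

On tidy simulated data like this, the decoder should beat simply guessing an average face every time. Real brain scans are far noisier and far higher-dimensional, which may be part of why the study's reconstructions look so rough.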

Even so, if technological progress in other arenas is taken into account, we might expect these sorts of intelligences to improve drastically over time. As our ability to gather brain activity in higher definition gets better, and artificial intelligences get better at parsing it, we'll need to tackle the second challenge.

FEEDING PEOPLE FALSE INFORMATION

If you want to build a world from scratch, you must first invent a way to give people false experiences. Carl Sagan said that, or something similar. Getting to the truth of the past is difficult in the Matrix.

That becomes especially true once scientists develop a way to implant false memories or experiences into our minds, something which has been accomplished already. At least it has been, in mice.

Nearly a decade ago, two scientists at a laboratory at MIT were experimenting with mice to see if they could change the animals' perceptions of the world around them.

The first step in that work involved identifying the neurons involved in forming memories. They accomplished this by creating genetically modified mice with light-sensitive proteins. In that way, they could observe the groupings of neurons, or engrams, associated with a particular memory. Moreover, hitting the engram with a laser by way of implants could reactivate a memory.

With that knowledge in hand, scientists were able to craft false memories in mice, specifically memories involving an electrical shock, which never actually occurred. These falsely implanted memories convinced the mice that a particular area was dangerous, triggering fear in their minds, despite there being no actual danger.

The mice, in effect, believed they'd had a prior experience that never actually happened. Their reality had been shifted through artificial means. And their future actions were impacted by those false memories.

These results, both the implanting of false information and the ability to read that information, exist in preliminary stages. The sorts of complex narrative information needed to create a convincing virtual existence still linger in the distance. Their shadows, however, the first warnings of their future potential, are apparent in current technology.

We can trust that scientists have our best interests at heart, and why wouldn't they? Our interests are their interests, after all. The same technology could be used to save people from post-traumatic stress disorder or paralyzing anxiety. We could modify personal experience such that each of us lives a happier and more fulfilling life.

Still, while these technologies are in their infancy, they're opening doors which, if they were bent toward nefarious intentions, could construct an entirely false reality for us to live inside. Once that happens, once we can no longer trust the veracity of our own experiences, anything is possible.