
Letting robots imagine themselves could lead to self-awareness

We might finally find out what androids dream of.

Our science fiction is filled with stories of self-aware robots. Some of them are friendly, like the inhabitants of Robot City in the 2005 animated film Robots (now streaming on Peacock!); others are less so. Despite our misgivings about the apocalypse that might loom if we grant awareness to our machines, we can’t help but dream about building a new form of intelligent life with which to share our world.

To a certain extent, we’re already sharing our lives with intelligent programs. The digital assistant that lives inside your phone or smart home gadgets has a certain amount of awareness, allowing it to listen and respond to your voice commands. Its awareness, however, is limited to what we’ve been able to feed it. It isn’t really aware or capable of learning in a meaningful way. At best, it takes information from humans and does a serviceable job of mimicking our speech.

Boyuan Chen and Hod Lipson, both from Columbia University, want to build truly self-aware machines, and to do so, they’re taking a wholly different approach, starting not with a machine mind but with a body.

“It’s a philosophically deep question, whether or not you can be aware if you don’t have a body. What we’re trying to do is build something from the ground up, not by imitating human self-awareness but by developing a truly self-aware machine which will likely have a very different kind of awareness than a human would,” Lipson told SYFY WIRE.

Lipson and Chen, along with Robert Kwiatkowski and Carl Vondrick, also from Columbia, designed an experiment in which a robot could learn about its own body without receiving instruction from engineers. Their findings, recently published in the journal Science Robotics, represent the first time a robot has built a full-body model of itself from scratch.

They took their inspiration from nature, looking to the ways in which humans and non-human animals learn to use their bodies through trial and error. We build internal models of ourselves at a young age and maintain those models throughout our lives. As our bodies grow and change, so too does our internal understanding of ourselves. That sort of awareness is what the researchers hoped to achieve in a machine and, by all accounts, they did.

“During the modeling process we don’t assume anything about which part of the body will be important or how to visualize the robot. We let the robot model the entire morphology including wires and cables,” Chen said.

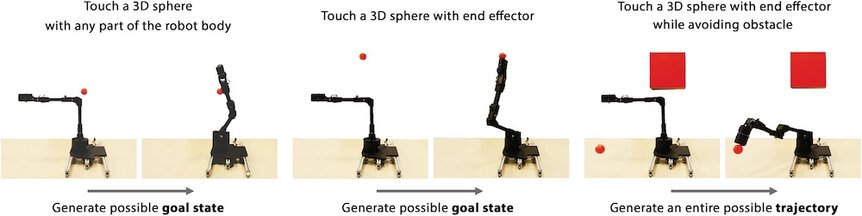

Rather than giving the robot a complete, accurate model of itself, as is typical in engineering, the team surrounded it with five live-streaming cameras so it could watch itself as it moved through its environment. After about three hours of wild, uninformed movements, the robot had built a workable model of itself that it could use to plan and execute actions, carrying out tasks and avoiding obstacles.

“A driverless car knows about the world in exquisite detail, but when it comes to itself, it’s been programmed by engineers. It makes practical sense; the engineers know everything about the car so why not program it? But we want the autonomy that comes from self-awareness. Once a machine can learn about itself, it’s not tethered to its engineers anymore,” Lipson said.

Programming a machine with a perfectly accurate representation of its body works well only as long as that model remains accurate. If the robot loses a limb or breaks a motor, that model no longer works, and the robot loses the ability to move and work effectively in its environment. Aside from the grander ambition of granting robots true sentience, that’s the primary advantage of self-learning robots: they can adapt as their bodies change, just like humans can.

“It can detect if a motor is broken or if part of the body is broken and it can tell you. To do that, it has to recognize what’s going on with its whole body. Then it can update its belief about what the body looks like. It accepts the fact that its body is broken and can update its self-model to match reality, then see if it can come up with a new plan to remain functional,” Chen said.

At present, their robot has a good working knowledge of its body in space but little else. Lipson noted that the complexity and richness of its awareness pale in comparison to human experience, but that their work represents a step along a path that he believes will ultimately lead to fully self-aware machines.

“In the long term we want to model not just the body but also the mind. We want the robot to not only imagine its body in the future but also imagine its own behavior in the future. That’s thinking about thinking and that’s much harder. It’s the Holy Grail,” Lipson said.

If this work progresses the way Lipson and the team believe it will, we’re headed for a future that resembles our fiction in one way or another. Whether our robotic neighbors reach out their mechanical hands in friendship or aggression remains to be seen, but rest assured they’ll know exactly where they are and what they’re doing.