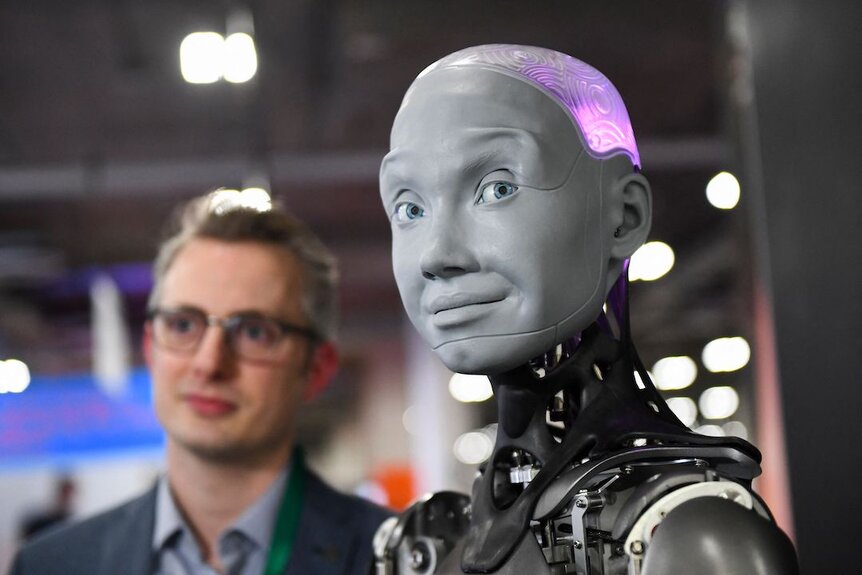

The Science Behind I, Robot: How Scientists Are Working on Isaac Asimov's Laws of Robotics

Telling robots what to do, and what not to do, is harder than it looks.

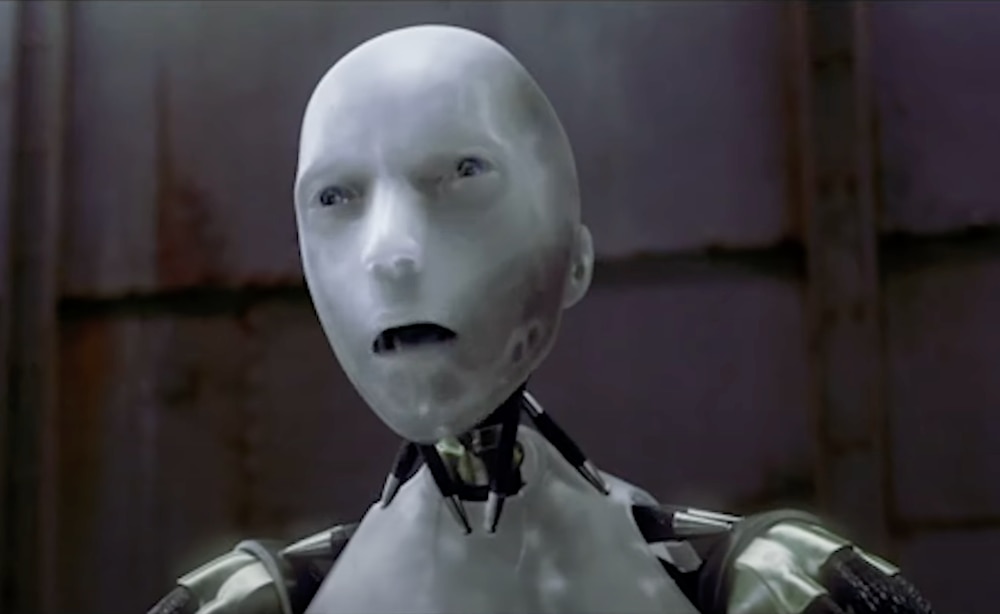

The 2004 sci-fi action flick I, Robot (streaming now on Peacock) features Will Smith as Del Spooner, a Chicago detective in the year 2035. It’s a loose adaptation of Isaac Asimov’s robot stories, which imagined some of the potential pitfalls of a human-robot society.

The movie opens on a world filled with metal, anthropomorphic servant robots. They deliver our food and take out the trash. They walk our dogs, clean the litter box, and do all of the things we don’t really want to do for ourselves. They are everywhere, operating mostly autonomously, intermixed throughout society. And humanity is kept safe during interactions with our metal counterparts thanks to Asimov's Three Laws of Robotics. The Three Laws are a set of simple rules for governing the behavior of robots. Paraphrased, they look like this.

First, a robot may not harm a human or allow a human to be harmed through inaction. Second, a robot must obey any order given by a human, unless that order would break the first law. Finally, a robot must protect itself, so long as that protection doesn’t break the first or second laws. Asimov later added another law, often called the Zeroth Law, in an attempt to close an apparent gap in the first three: a robot may not harm humanity or allow humanity to come to harm through inaction.
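One way to picture how the three laws rank against each other is as a strict priority ordering over a robot's options. The snippet below is only an illustrative sketch, not anything from Asimov or the film: the `Action` class, its boolean fields, and the `choose` function are hypothetical names, and it assumes the robot already knows which laws each option would break, which is exactly the hard part.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A candidate action, flagged by which law it would break (hypothetical model)."""
    name: str
    harms_human: bool     # First Law: injures a human or lets one come to harm
    disobeys_order: bool  # Second Law: ignores an order given by a human
    endangers_self: bool  # Third Law: puts the robot itself at risk


def law_violations(action: Action) -> tuple:
    # Tuples compare element by element and False sorts before True,
    # so a First Law violation always outweighs any lower-law violation.
    return (action.harms_human, action.disobeys_order, action.endangers_self)


def choose(actions: list) -> Action:
    """Pick the option whose violations are least serious under the law ordering."""
    return min(actions, key=law_violations)


# A human orders the robot into a dangerous room: obeying risks the robot,
# refusing breaks the Second Law, so the robot obeys.
risky_errand = [
    Action("obey", harms_human=False, disobeys_order=False, endangers_self=True),
    Action("refuse", harms_human=False, disobeys_order=True, endangers_self=False),
]
print(choose(risky_errand).name)

# A human orders the robot to hit someone: obeying breaks the First Law,
# so the robot refuses.
harmful_order = [
    Action("obey", harms_human=True, disobeys_order=False, endangers_self=False),
    Action("refuse", harms_human=False, disobeys_order=True, endangers_self=False),
]
print(choose(harmful_order).name)
```

The ordering itself is trivial to encode. Everything difficult is hidden inside those three boolean flags, since deciding what counts as harm is a judgment the code simply takes as given.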

Writing Isaac Asimov's Laws of Robotics for the Real World

It’s a fairly solid framework on paper, but Asimov’s own stories and their big screen adaptation demonstrate that the laws don’t necessarily work out as intended. As robots become an increasingly common part of the real world and their hardware and software capabilities rapidly improve, roboticists are busily trying to establish a real-world equivalent of the Three Laws that might actually work. Some of those efforts, like South Korea’s robot ethics charter, include a version of Asimov’s laws. Other ideas take a totally different approach.

It’s not enough just to make our robots safe; we also need to make people comfortable hanging out with them. Part of our problem is psychological. It turns out we don’t feel super comfy around autonomous machines made of solid steel, but we like other materials just fine.

A study from Washington State University found that people become more comfortable around robots when they are made of softer stuff. Researchers documented that the anxiety level of study participants ratcheted up the closer they got to hard robots, but the same wasn’t true of soft robots. They propose that a shift toward more soft robots could make human-robot interaction safer and more psychologically acceptable. At least if soft robots rise up against us, they won’t punch quite as hard.

In the meantime, the University of Texas at Austin is running a five-year experiment to figure out how humans and robots interact now, and how we can make those interactions smoother in the future. They’ve created a robot delivery network made up of dog-shaped robots from Boston Dynamics and Unitree. People on and around campus can order from a selection of supplies and have their order delivered via metal dog. It’s part of the University’s Living and Working with Robots program and, once completed, will be the largest study of human-robot interactions ever conducted.

“Robotic systems are becoming more ubiquitous. In addition to programming robots to perform a realistic task such as delivering supplies, we will be able to gather observations to help develop standards for safety, communication, and behavior to allow these future systems to be useful and safe in our community,” said project lead Luis Sentis, in a statement.

These sorts of real-world test cases might be critical to defining a useful set of laws for robotics. Many of Asimov’s stories focus on the ways in which his own laws work on paper but fail in practice. Take the first law, for example: A robot can’t cause harm. But what constitutes harm? If a robot delivers unhealthy food for the eighth time this week, at your request, or relays news that is emotionally devastating, does that count as harm? Humans operate on a set of abstractions that we just sort of understand in deep but inexplicable ways. How can we translate that innate understanding to machines?

Maybe We’re Thinking About Isaac Asimov's Laws of Robotics All Wrong

Some scientists have suggested that instead of having rules built on limiting robot behavior, we should establish open guidelines that allow them to explore a wide range of options and choose the best for any given situation. In this kind of robotic paradigm there would be only one law: A robot must maintain and maximize the empowerment of itself and the people around it. Here’s what that might look like.

Holding the elevator for someone when their hands are full is one way of maximizing that person’s empowerment. Helping them carry their load is another. Restraining or harming someone would decrease their empowerment, as would taking or destroying something of theirs. Meanwhile, a robot that ensures it isn’t damaged maintains its own empowerment. Researchers found that in many cases, adherence to Asimov’s laws naturally emerges through maximization of individual empowerment.
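To make the idea a little more concrete, here is a rough sketch of how empowerment could be scored in a toy world. It rests on a simplifying assumption the article does not state: in a fully predictable world, an agent's empowerment can be measured as the base-2 logarithm of the number of distinct places it can reach within a fixed number of moves. The grid, the `MOVES` list, and the function names are all hypothetical, chosen purely for illustration.

```python
import math

# Hypothetical move set on a square grid: stay put, or step in one of four directions.
MOVES = [(0, 0), (1, 0), (-1, 0), (0, 1), (0, -1)]


def reachable(start, walls, size, horizon):
    """Return every cell the agent could occupy after `horizon` moves."""
    frontier = {start}
    for _ in range(horizon):
        next_frontier = set()
        for x, y in frontier:
            for dx, dy in MOVES:
                cell = (x + dx, y + dy)
                inside = 0 <= cell[0] < size and 0 <= cell[1] < size
                # Blocked moves are skipped; the "stay put" move keeps the
                # agent's current cell in the set.
                if inside and cell not in walls:
                    next_frontier.add(cell)
        frontier = next_frontier
    return frontier


def empowerment(start, walls, size, horizon):
    """Score empowerment as log2 of the number of reachable cells (toy assumption)."""
    return math.log2(len(reachable(start, walls, size, horizon)))


# A person standing in the middle of an open 5x5 room has several options.
free = empowerment((2, 2), walls=set(), size=5, horizon=1)

# The same person boxed in on all four sides can only stay where they are.
boxed_in = {(1, 2), (3, 2), (2, 1), (2, 3)}
restrained = empowerment((2, 2), walls=boxed_in, size=5, horizon=1)

print(free > restrained)  # True
print(restrained)         # 0.0
```

Under this toy measure, restraining someone drives their score to zero, while clearing an obstacle out of their path raises it. A robot told to keep everyone's score high would therefore avoid the first and favor the second.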

This sort of framework might give robots the flexibility to make useful decisions in complex situations. A robot seeking to maximize empowerment might cause minor harm to avoid greater harm, like pushing someone out of the way of an oncoming bus so they aren’t smooshed by it. It might also prevent some of the nightmare scenarios Asimov and others have imagined, like robots taking control of humanity for our own protection. Even if they thought it was best for us, that would break the ultimate maxim of universal empowerment.

Of course, that might just be kicking the philosophical can down the road. After all, what constitutes empowerment? Might a robot decide to constrain your choices today, freezing you out of your bank account, for example, in favor of greater empowerment in the future? From the robot’s point of view, improving your financial security is a pretty airtight argument for preventing you from making what it sees as frivolous purchases. The same can be said for plenty of non-financial scenarios too. Then again, these are the same kinds of ethical gray areas that people stumble into all the time. Maybe we can’t expect better from our machines.

Catch the cautionary tale that is I, Robot, streaming now on Peacock.